
Photography through the Eyes of a Machine

By Lars - 7 min read

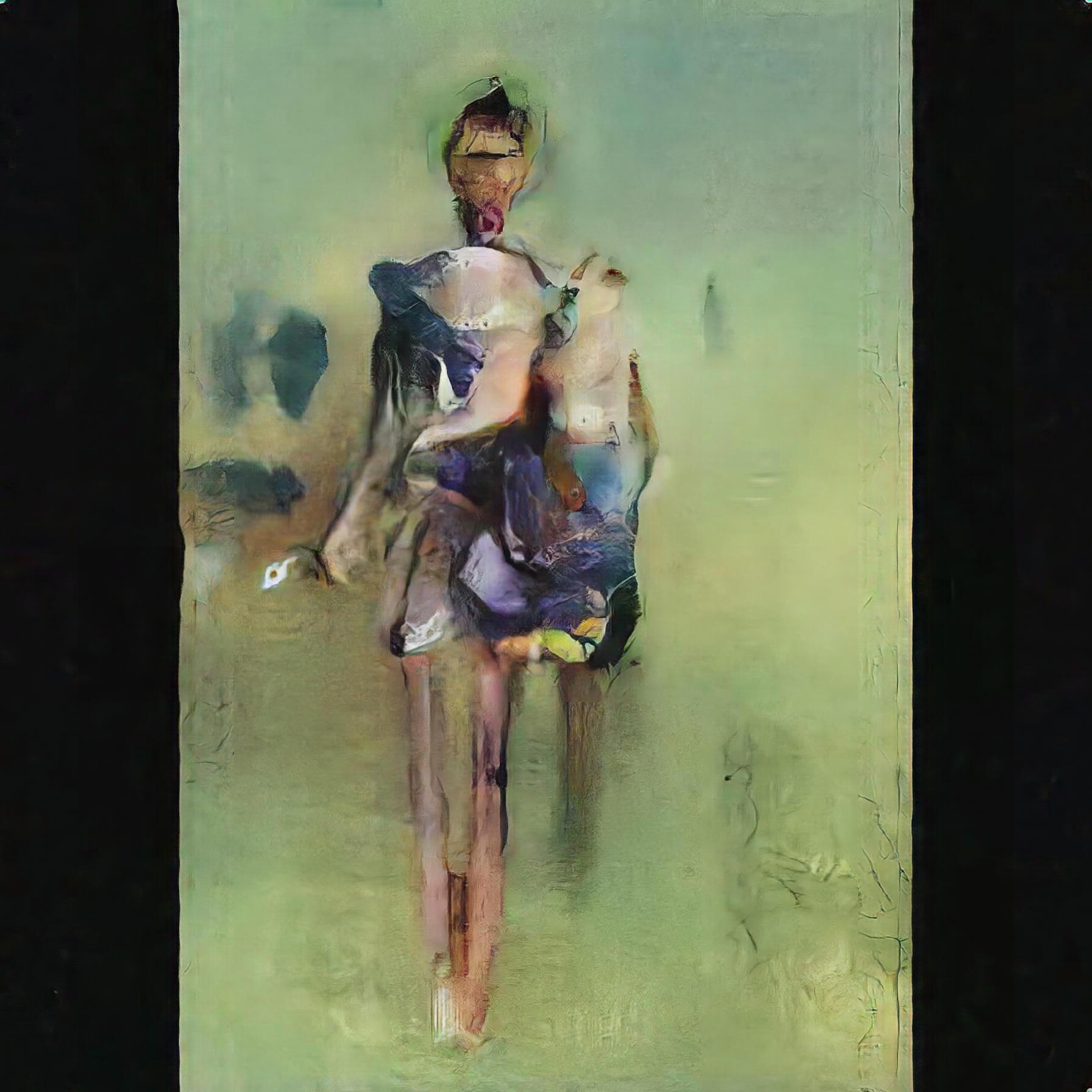

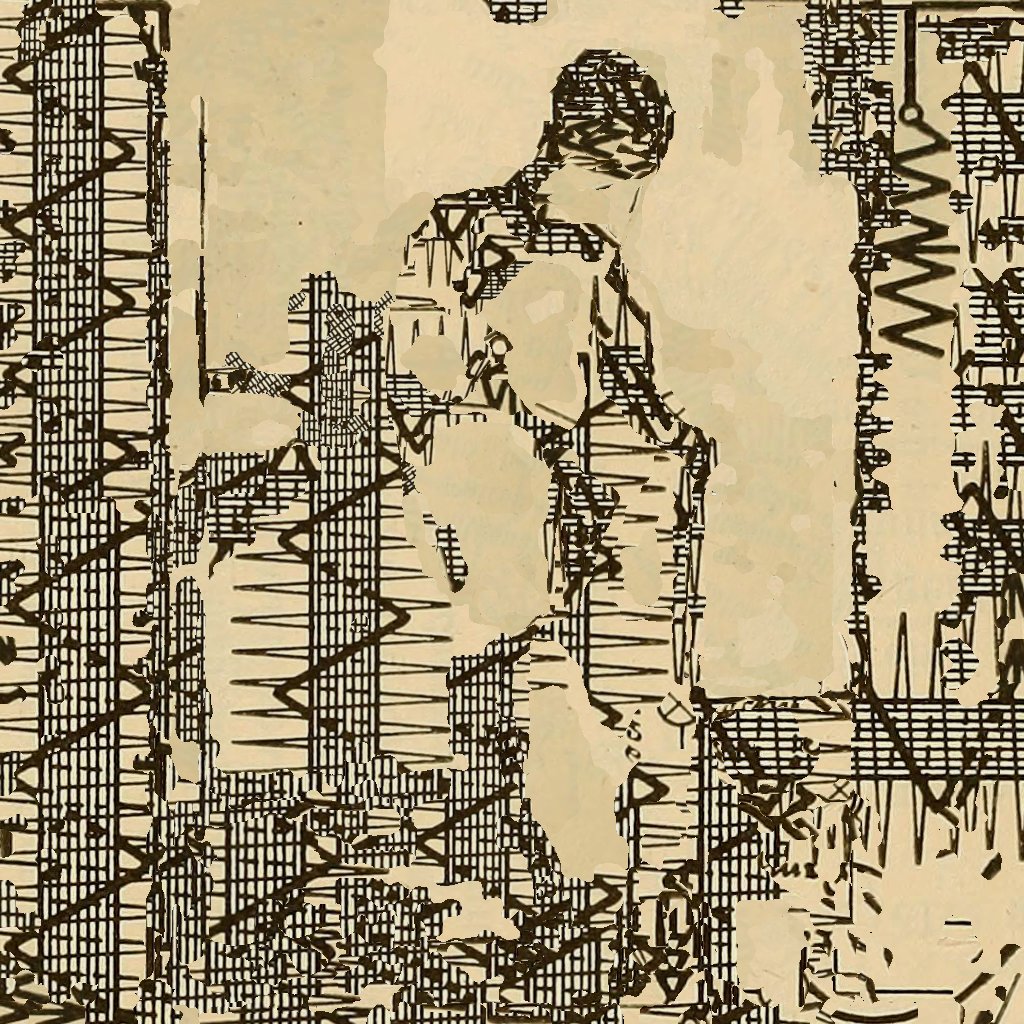

Mario Klingemann uses computers to reinterpret photography. A preview of the EyeEm Festival speaker’s unusual art.

Mario Klingemann is an unusual artist: He uses artificial intelligence to process photos and creates surreal new imagery in the process. Before he steps on stage at the EyeEm Festival, we asked him to answer some questions about his work.

Is it fair to say you’re using computers to create art—or are you teaching them to do it themselves?

So far, the machine is just my tool of choice for creating art. I try to design algorithms or train models in a way that gives them many degrees of freedom—but ultimately, whatever output they generate is still within the space of possibilities I defined. Nevertheless, one of my long-term goals is to reach a point where the computer is able to continuously evolve its own artistic personality.

What does that mean?

It would be able to truly surprise me by creating work that is aesthetically interesting and different from anything I would expect.

What do you specialize in?

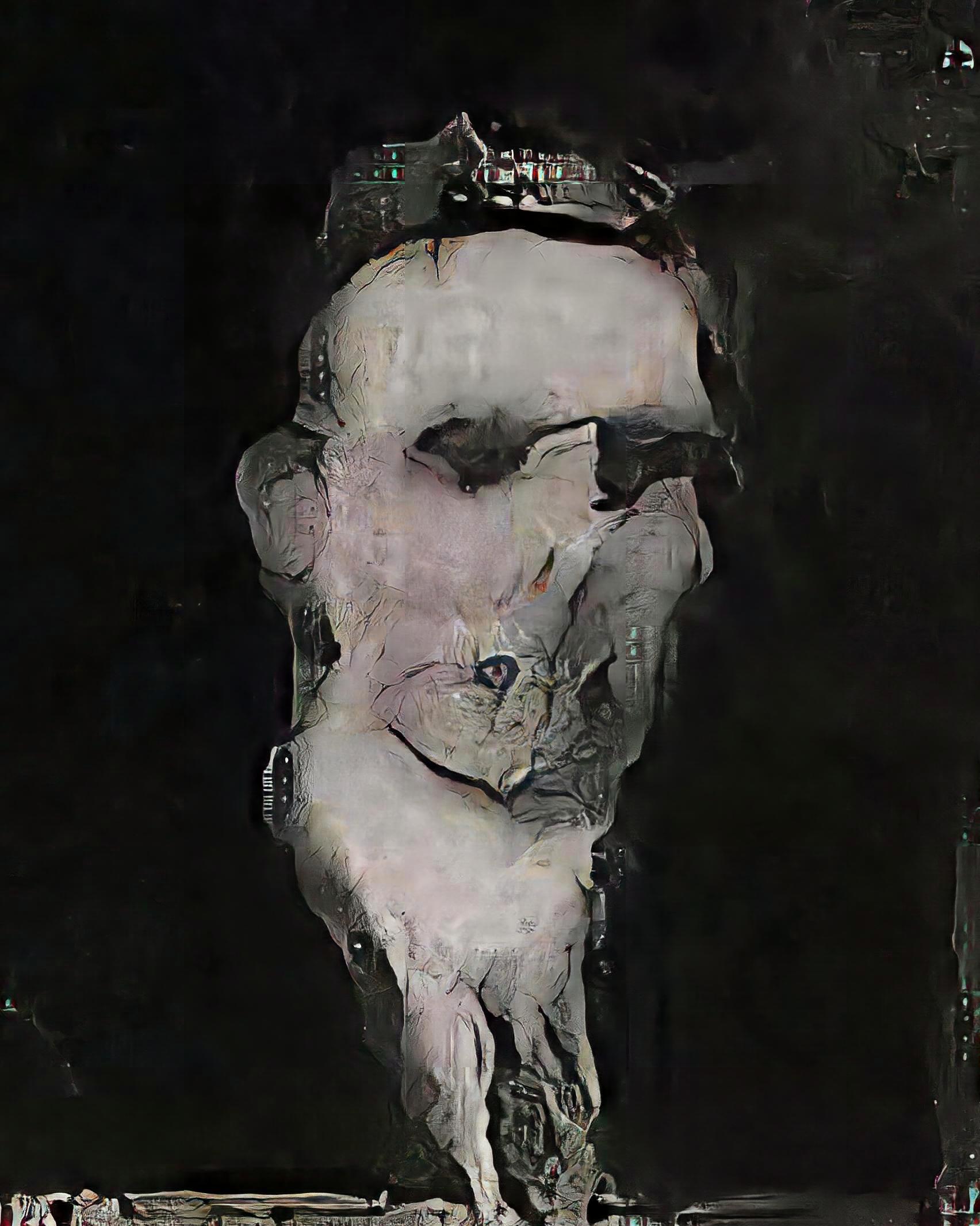

In “classic” generative art, you write a set of rules that produce an output based on a few input parameters. That output can be visual, but it can also be text or music. You feed these algorithms random values, and the machine will generate infinite variations within the possibility space of your defined rules. It’s a fascinating way to create, one I have worked with for more than 20 years, but it’s hard to escape its very specific aesthetic.
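To make the idea of "rules plus random input values" a little more concrete, here is a minimal Python sketch. It is not Klingemann's code: the spiral rule, the functions `generate` and `random_variation`, and the parameter ranges are all hypothetical, chosen only to show how a fixed rule set turns random numbers into endless variations that never leave the space the rules define.

```python
import math
import random


def generate(angle_step, radius_growth, n_points=50):
    """Hypothetical rule set: a spiral whose shape is fixed by two parameters."""
    points = []
    for i in range(n_points):
        angle = i * angle_step      # rule 1: turn by a constant angle each step
        radius = i * radius_growth  # rule 2: move outward at a constant rate
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points


def random_variation(rng):
    # Random input values; whatever they are, the output is still a spiral,
    # because the rules above bound what can come out.
    angle_step = rng.uniform(0.1, 2.5)
    radius_growth = rng.uniform(0.5, 3.0)
    return generate(angle_step, radius_growth)


rng = random.Random(42)
variations = [random_variation(rng) for _ in range(3)]
```

Every call produces a different drawing, yet all of them share the same "spiral" look, which is the specific aesthetic that is hard to escape.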

What are you doing instead?

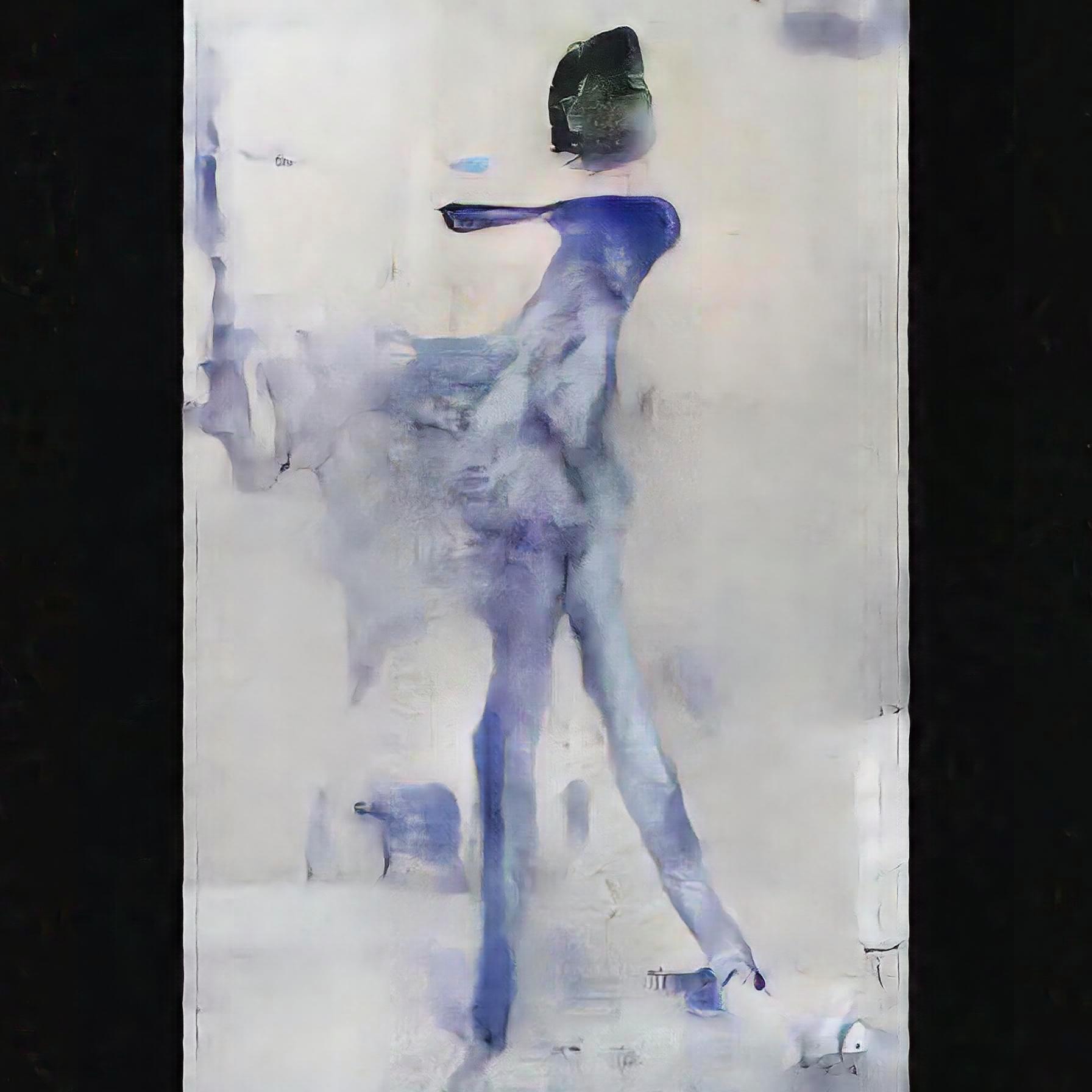

Advances in machine learning in recent years have opened up a very different way to create. It’s no longer rule-based or programmed, but a more complex way of transforming data. In simplified terms: When you train a neural network on certain sets of images, it tries to “understand” them by creating an internal representation called a “latent space”. It condenses the relevant information from each image into a few numbers. You can then project that abstract data back into the “real” world, but depending on your model, the output can be very different from the input. You can compare it to translating a sentence from German into English: The meaning of the sentence remains the same, but not only will it sound different, the words may also appear in a new order.
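The idea of condensing images into a few numbers and projecting them back can be illustrated with a much simpler, linear stand-in. The sketch below uses principal component analysis (PCA) instead of a neural network, on a hypothetical toy dataset of 64-pixel "images"; the names `encode` and `decode` are illustrative and not taken from any real model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy dataset: 200 "images" of 8x8 pixels, flattened to 64 values.
# Each one is a mix of two underlying patterns plus a little noise.
pattern_a = np.linspace(0.0, 1.0, 64)          # a smooth gradient
pattern_b = np.sin(np.linspace(0.0, 6.0, 64))  # a wave-like stripe
weights = rng.uniform(0.0, 1.0, size=(200, 2))
images = weights @ np.vstack([pattern_a, pattern_b]) + 0.01 * rng.standard_normal((200, 64))

# "Training": find the directions that explain most of the variation (PCA via SVD).
mean = images.mean(axis=0)
_, _, vt = np.linalg.svd(images - mean, full_matrices=False)
components = vt[:2]  # keep a 2-dimensional latent space


def encode(image):
    """Condense 64 pixel values into 2 latent numbers."""
    return components @ (image - mean)


def decode(z):
    """Project 2 latent numbers back into 64 pixel values."""
    return mean + z @ components


z = encode(images[0])
reconstruction = decode(z)

# Explore the latent space: a point halfway between two images decodes to a new image.
z_mid = 0.5 * (encode(images[0]) + encode(images[1]))
new_image = decode(z_mid)
```

Because PCA is linear, the in-between image here is simply a blend of the two originals. A trained neural network replaces `encode` and `decode` with nonlinear mappings, which is what allows the projected output to differ so strongly from the input.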

It isn’t quite photography—what do you call it?

I call it “Neurography”, short for “neural photography”. Just as photographers frame the real world, I generate and explore virtual multidimensional latent spaces, looking for interesting viewpoints or motifs.

On Twitter, you nevertheless talk dismissively about an “AI hype”. How come?

AI, short for artificial intelligence, has become an overused buzzword. It’s attached to anything and everything; I wouldn’t be surprised to come across a brand of AI yoghurt in the supermarket soon. What media headlines call “AI” is not what I would call it. Don’t get me wrong: Deep learning has become very capable and fascinating, but in my opinion it still relies on brute force rather than “intelligence”. Once we have reached the point of actually creating artificial intelligence, that term will be worn out and we will have to come up with a new label. Maybe the AI of the future will be creative enough to find a good name.

How should we regard AI when it comes to the arts in general, and photography in particular?

Just like any other new technology, it can be a powerful tool to view the world from a different angle. It can also help automate or augment certain tasks that were previously deemed exclusively “human”. Some people might see this as a threat, but technological progress has always worked this way, and fortunately we are a very adaptive species. The sad truth is that if something can be done faster or cheaper, it usually will be done that way, even if some of the finer details or exquisite craftsmanship are lost in the process. The good news is that some people will still value the “old” way of doing things, so there will always remain a niche for those who are good at something and don’t want to go with the flow.

As with any new technology, we’re in an early phase where the tool itself is the center of attention. That’s when the low-hanging fruit is harvested and when one can still make a name for oneself as a “pioneer” just by being in the game early. This is where we are now with AI. But this phase will fade, and deep learning will become just another tool for anyone to use. Then we’ll see who uses it in artful ways and who finds unique forms of expression. Everybody owns a camera today, but not everybody creates “art” with it. In a few years, everybody will have intelligent assistants for creative tasks in their pocket. Those assistants will raise and normalize the overall aesthetic quality of what we see day by day, but since that kind of aesthetic will have become the new normal, people will find new ways to stand out from the crowd.

Your work seems to show that computers can help bring out human creativity. How exactly?

I’m of the unpopular opinion that true imagination does not exist. Our brains are not capable of creating something from nothing. Obviously, we are great at combining knowledge and ideas that we have observed or learned during our lives and at transforming them into something different. But in order to do that, we first have to have seen, heard, or read the raw information somewhere else. Unless you are into mind-altering substances, it’s very hard to make your brain work outside its specifications, which is why most people come up with the same kind of unoriginal solutions to problems. Creativity is the art of finding the non-obvious combinations of concepts or domain transfers among the many possibilities.

That’s where I see computers and AI coming in to help us. Machines aren’t bound by genetically evolved rules the way our brains are. They can escape the beaten paths we tend to think along, since we can force them to ignore the obvious. This enables them to try out the strangest of combinations, most of which will be neither interesting nor meaningful. But a machine can try again and again, and unlike humans, it will not forget what it has already tried or attempt the same approach over and over. It can then present the more promising results of that search back to us, and we can decide whether there is something to some of them and, if so, make them part of our own repertoire of ideas, a process also known as “inspiration”.
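The search process described above (try many odd combinations, never repeat one, and hand only the most promising back to a human) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Klingemann's method: the `concepts` list, the `explore` function, and especially `novelty_score` are hypothetical, and the score is a repeatable pseudo-random placeholder because judging what is "interesting" is exactly the part a human still does.

```python
import heapq
import random

# Hypothetical pool of concepts the machine may combine.
concepts = ["portrait", "forest", "clockwork", "ocean",
            "typography", "x-ray", "baroque", "glitch"]


def novelty_score(pair):
    """Hypothetical placeholder for judging how promising a combination is.

    It simply derives a repeatable pseudo-random number from the pair;
    a real system would need a meaningful measure here.
    """
    return random.Random("+".join(sorted(pair))).random()


def explore(concepts, budget, top_k, seed=0):
    """Try random concept pairs without ever repeating one; return the best."""
    rng = random.Random(seed)
    tried = set()  # memory of every combination already attempted
    results = []
    max_pairs = len(concepts) * (len(concepts) - 1) // 2
    while len(tried) < min(budget, max_pairs):
        pair = frozenset(rng.sample(concepts, 2))
        if pair in tried:
            continue  # never attempt the same combination twice
        tried.add(pair)
        results.append((novelty_score(pair), tuple(sorted(pair))))
    # Present only the most promising candidates back to the human.
    return heapq.nlargest(top_k, results), tried


best, tried = explore(concepts, budget=20, top_k=3)
for score, pair in best:
    print(f"{score:.2f}  {pair[0]} + {pair[1]}")
```

The `tried` set is what gives the machine its perfect memory; the final decision about which of the `best` pairs is worth pursuing stays with the person reading the printout.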

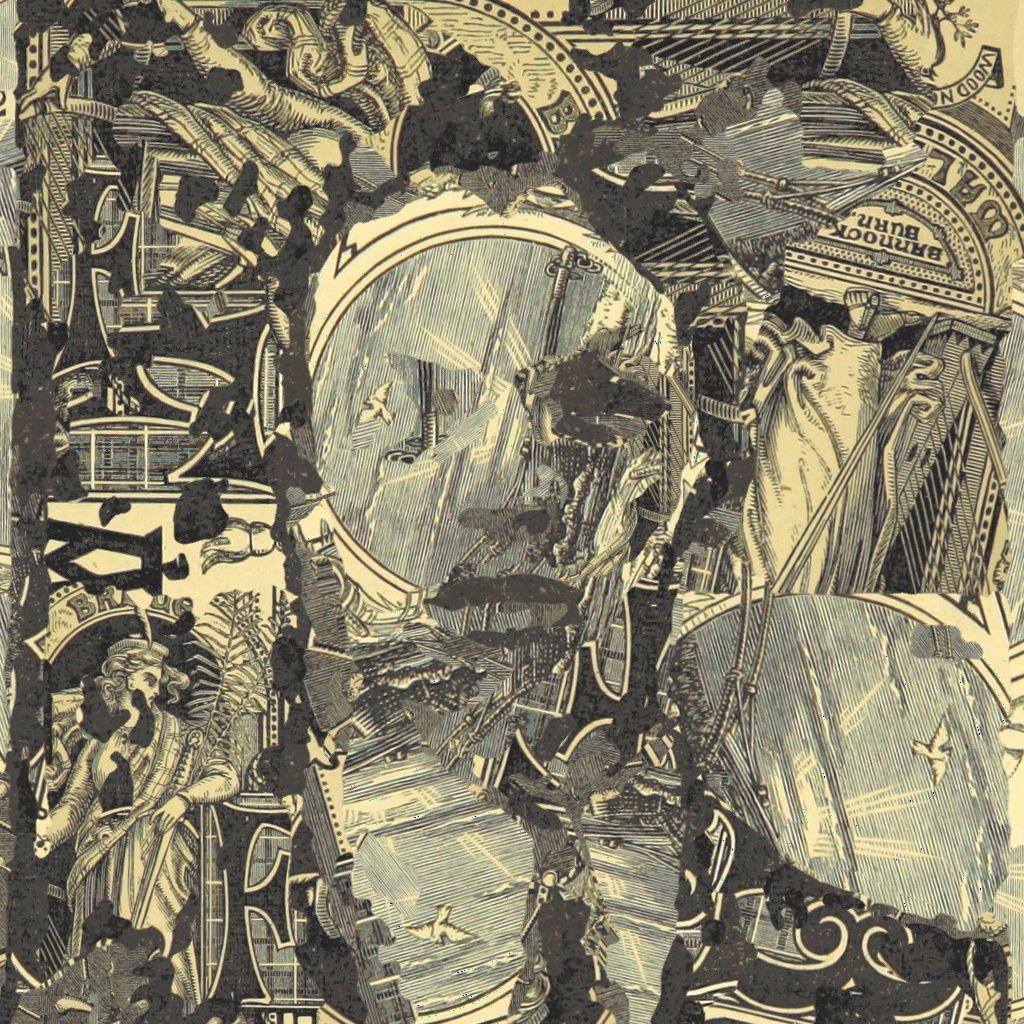

You’re the artist in residence at Google Arts & Culture. What does that entail?

The Google Cultural Institute wants to help make the cultural treasures of the world accessible and discoverable for everyone. It does that by supporting museums, collections, and other cultural institutions in digitizing their artifacts and making them available from anywhere. But when you have millions of cultural artifacts, the question becomes how to find what you’re looking for, or, even better, how to find what you might not even know you were looking for. That is where the lab comes in: I’m currently in residence there together with a brilliant group of other artists and engineers. Using deep learning and other technologies like VR and AR, we try to find new and interesting ways to explore and experience this cultural heritage.

In practice, that means building prototypes that might turn into experimental interfaces, like the ones you can find here, or it can result in an installation like my “X Degrees of Separation”, which has been shown in various museums around the world and will be presented at Ars Electronica next week.

What can we expect from your talk at the EyeEm Festival?

Everything I said above, but with more pictures.